System and Architectures for Visual Computing

Joint Tutorial and Workshop Co-located with ISCA 2024

Merged with the EVGA Workshop

9:00 AM – 5:30 PM, June 30, 2024 (Alerce)

Join the Zoom Livestream

Why This Event?

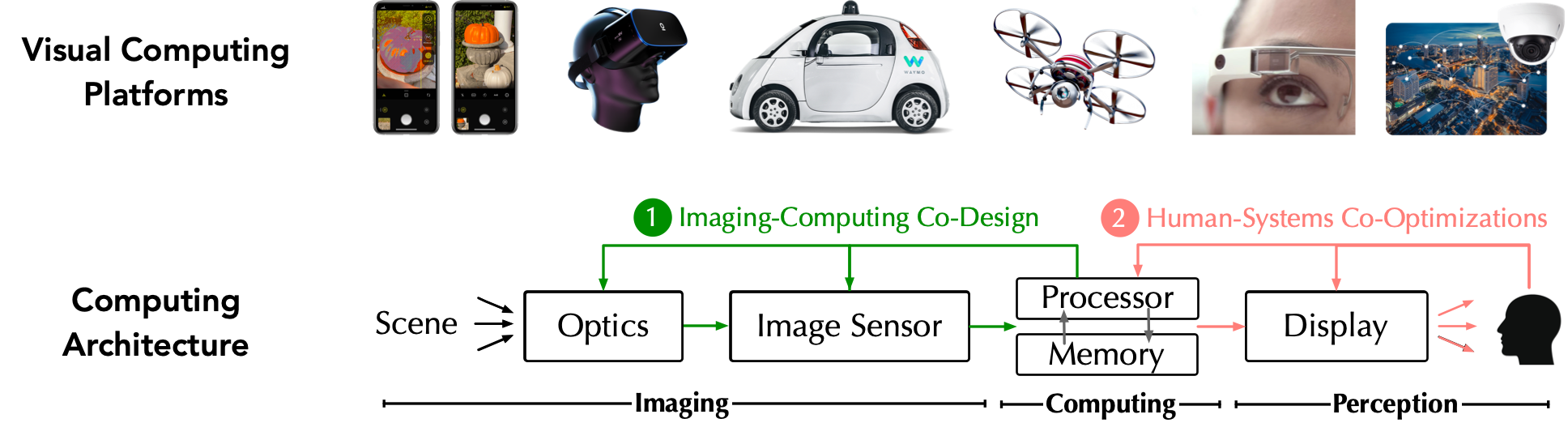

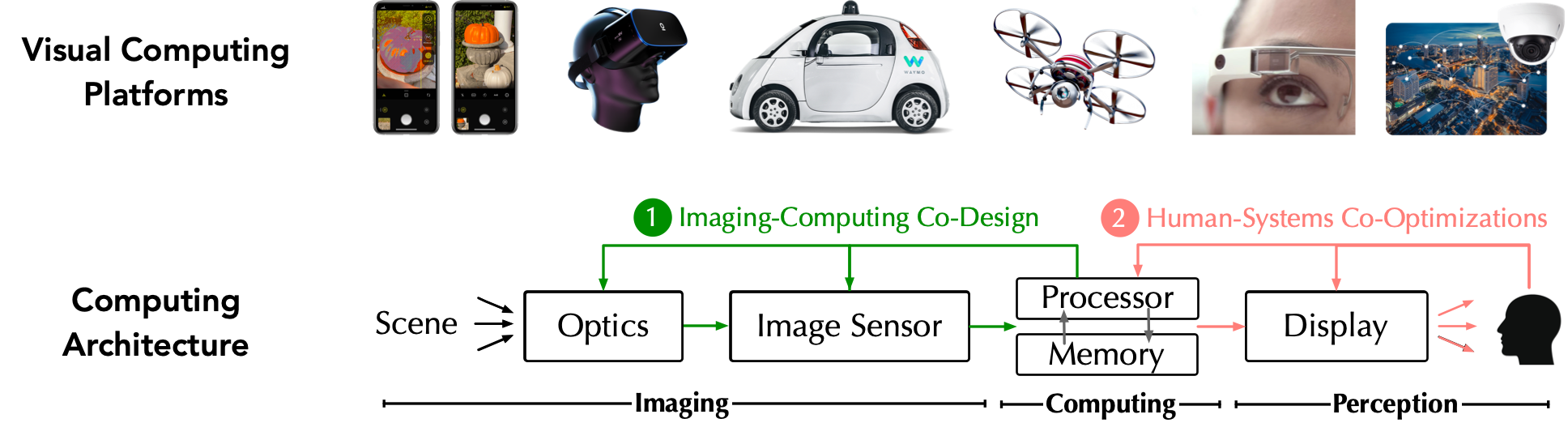

Emerging platforms such as Augmented Reality (AR), Virtual Reality (VR), and autonomous machines, while computational in nature, interact intimately with both the environment and humans. They must be built from the ground up with principled consideration of three main components: imaging, computer systems, and human perception.

The goal of this tutorial is to prepare CS researchers for the exciting era of human-centered visual computing, in which we can both 1) leverage human perception to enhance computing and imaging systems, and 2) develop imaging and computational tools that advance our understanding of human perception and, ultimately, augment it.

To that end, this tutorial will 1) teach the basics of visual computing from first principles, including imaging (e.g., optics and image sensors) that captures visual content, computing on visual content, displays, and human perception and cognition, and 2) discuss exciting research opportunities for the systems and architecture community.

The tutorial is not tied to a specific product or hardware platform. Instead, we focus on teaching fundamental principles so that participants walk away able to design new algorithms, build new applications, and engineer the systems and hardware that support those algorithms and applications.

Tutorial (9:00 AM – 12:30 PM)

Lunch Break (12:30 PM – 2:00 PM)

Workshop (2:00 PM – 5:40 PM)

The videos of all the talks are available here

Reference Materials